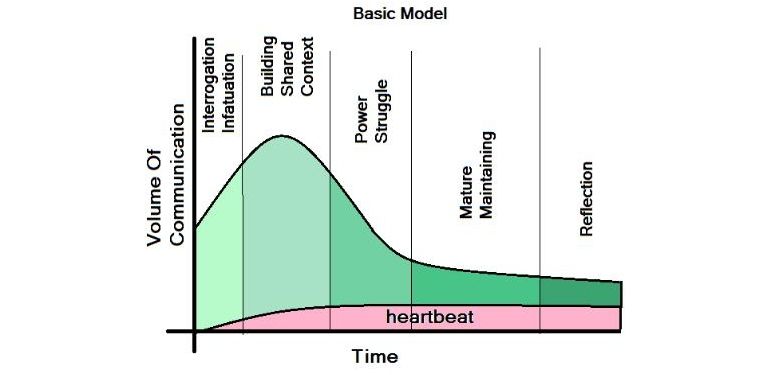

I wrote this "predictive communication model" during Thanksgiving of 2013. It measures changes in personal relationships through semantic analysis. For instance, two people may use "I", "me", "you" at the start of a relationship, which may evolve into "we", "us", "ours" over time.
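To make the pronoun idea concrete, here is a minimal sketch (not the original 2013 model) that measures what share of the tracked pronouns in a message are collective. The word lists come straight from the example above; the function name `collective_share` and the sample sentences are hypothetical, and a real version would need a richer vocabulary and many messages over time.

```python
import re

# Pronoun sets taken from the example in the text; illustrative, not exhaustive.
INDIVIDUAL = {"i", "me", "you"}
COLLECTIVE = {"we", "us", "ours"}

def collective_share(text):
    """Return the fraction of tracked pronouns that are collective.

    Returns None when the text contains no tracked pronouns at all.
    """
    words = re.findall(r"[a-z]+", text.lower())
    individual = sum(w in INDIVIDUAL for w in words)
    collective = sum(w in COLLECTIVE for w in words)
    total = individual + collective
    return collective / total if total else None

# Hypothetical messages from early and late in a relationship.
early = "I think you should call me when you get home."
later = "We should plan a trip that works for us."

print(collective_share(early))  # 0.0
print(collective_share(later))  # 1.0
```

Tracking this number across a conversation history would show the drift from individual to collective language, assuming the messages are long enough to contain pronouns in the first place.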

Now add the latest AI hysteria, AI-induced psychosis, into the mix...

I read the headline that "OpenAI doesn't know how to stop it" and a solution seemed obvious to me. I fed a concept of psychosis detection to ChatGPT, along with my original design paper, and it spat out an incredibly detailed design. The paper below is a simplified overview oriented towards AI psychosis, but it should be equally applicable to both sides of a conversation. It builds upon ChatGPT's existing semantic framework, so it should be easy to add on with no retraining required.
