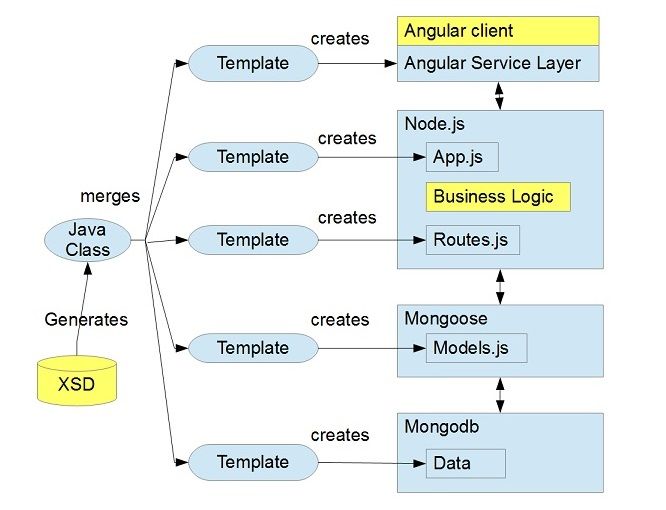

Prologue: In 2013 I developed the Model-Driven Node (MDN), a Ruby on Rails-style generator that creates a commercial-scale (SAP, ERP, etc.) NodeJS app from an XSD schema document. I wrote it in four weeks and eventually generated a 1,000-table application with extremely reliable code, producing 5,000+ man-hours of work in a few minutes. AI code generation poses a similar challenge.

JavaScript (and by extension NodeJS) has scalability issues due to its lack of compile-time type enforcement; TypeScript adds compile-time checks, but its types are erased at runtime. Generating code with MDN, rather than hand-coding it, was one way to bypass that limitation. But the job market chose a different direction, TypeScript, probably due to Microsoft's influence as its creator. Hand-coded TypeScript glued to JavaScript is still a kludgy solution, though, and it looks like TypeScript adoption peaked by 2025.

I believe TypeScript was a flawed solution, and I'm now 95% sure that NodeJS hit a complexity limit a few years ago (as I predicted in 2013), which fueled the emergence of its "Next.js" successor.

Now we're in the Age of AI, with similar scalability and complexity issues due to the non-deterministic behavior of AI code generation. We should use an AI-specific methodology to create maintainable apps with 99.9999% uptime, i.e., mission-critical apps like SAP. I'd bet big money that most of these "95% AI-generated" codebases aren't maintainable and lack six nines of reliability. Most are probably generated ad hoc as a monolithic codebase, which was my approach with ChatGPT at first.
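To put six nines in perspective, the yearly downtime budget for an availability target can be computed directly. A small sketch, assuming a 365.25-day year (`downtimeBudgetSeconds` is an illustrative name, not part of any library):

```typescript
// Downtime budget per year for an availability of "N nines".
// Assumes a 365.25-day year; downtimeBudgetSeconds is an illustrative name.
const SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60; // 31,557,600 seconds

function downtimeBudgetSeconds(nines: number): number {
  // N nines means an unavailable fraction of 10^-N (e.g. 6 nines = 99.9999%).
  return SECONDS_PER_YEAR * 10 ** -nines;
}

for (const nines of [3, 4, 5, 6]) {
  const seconds = downtimeBudgetSeconds(nines);
  console.log(`${nines} nines: ${seconds.toFixed(1)} seconds of downtime per year`);
}
```

Six nines leaves about 31.6 seconds of downtime per year, compared with roughly 8.8 hours for three nines (99.9%).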

Methodology

The non-deterministic nature of generative AI makes achieving a repeatable process an obstacle in software development. So this post lays out an evolving (for now) AI-specific methodology, plus job-skills criteria.

Create a static foundation that GPT scripts reference.

These items are never regenerated:

- external REST API (or maybe GraphQL for more abstraction)

- JSON schema definitions

- SQL DDL

- naming definitions for schema elements, APIs, and class names, referenced during generation
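The naming definitions can be as simple as a hand-written registry that every generation prompt includes and that generated output is checked against. A minimal sketch, in which the `NAMING` registry, its entries, and both helper functions are hypothetical:

```typescript
// Hypothetical static naming registry: written by hand, never regenerated.
const NAMING = {
  tables: ["customer", "sales_order", "order_line"],
  classes: ["Customer", "SalesOrder", "OrderLine"],
  apiPaths: ["/customers", "/sales-orders", "/sales-orders/{id}/lines"],
} as const;

type NamingKind = keyof typeof NAMING;

// Render the registry as a prompt preamble so every generation run sees the same names.
function namingPreamble(): string {
  return (Object.keys(NAMING) as NamingKind[])
    .map((kind) => `${kind}: ${NAMING[kind].join(", ")}`)
    .join("\n");
}

// Return any generated names that are not in the registry for the given kind.
function unknownNames(kind: NamingKind, generated: string[]): string[] {
  const allowed = new Set<string>(NAMING[kind]);
  return generated.filter((name) => !allowed.has(name));
}

console.log(namingPreamble());
// unknownNames returns ["SalesOrderEntity"]: the generator invented a class name.
console.log(unknownNames("classes", ["Customer", "SalesOrderEntity"]));
```

Rejecting output that introduces unregistered names keeps each regeneration anchored to the same vocabulary instead of letting names drift from run to run.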

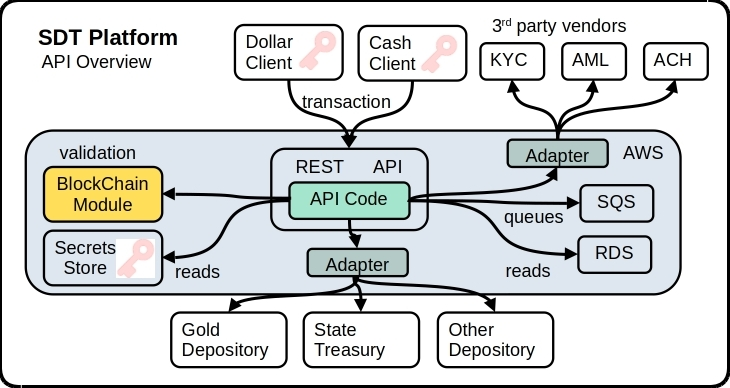

Decompose the app into SOA services or modules, as shown in the diagram.
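In a decomposition like this, one way to keep regeneration from rippling across modules is to make each service's contract part of the static foundation and let the AI regenerate only what sits behind it. A minimal sketch; `Customer`, `CustomerService`, and `InMemoryCustomerService` are hypothetical names, and the in-memory store stands in for real persistence:

```typescript
// --- static, hand-written, never regenerated ---
interface Customer {
  id: string;
  name: string;
  creditLimit: number;
}

interface CustomerService {
  getCustomer(id: string): Promise<Customer | undefined>;
  createCustomer(input: Omit<Customer, "id">): Promise<Customer>;
}

// --- generated, replaceable on every regeneration ---
class InMemoryCustomerService implements CustomerService {
  private readonly store = new Map<string, Customer>();
  private nextId = 1;

  async getCustomer(id: string): Promise<Customer | undefined> {
    return this.store.get(id);
  }

  async createCustomer(input: Omit<Customer, "id">): Promise<Customer> {
    const customer: Customer = { id: String(this.nextId++), ...input };
    this.store.set(customer.id, customer);
    return customer;
  }
}

// Callers depend only on the interface, never on the generated class.
async function main(service: CustomerService): Promise<void> {
  const created = await service.createCustomer({ name: "Acme", creditLimit: 5000 });
  const fetched = await service.getCustomer(created.id);
  console.log(fetched?.name); // Acme
}

main(new InMemoryCustomerService()).catch(console.error);
```

Because `main` sees only `CustomerService`, a regenerated implementation can be swapped in as long as it still satisfies the interface.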

Changes to standard SOA methodology:

- More time spent upfront on API and JSON design

- More abstract API design, so that minor changes are absorbed by the object definitions

- Much more thorough integration testing to detect minor differences between generations

- Much less unit testing, since internal class behavior can't be guaranteed across regenerations
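Integration testing at the API boundary can treat each generated service as a black box: enumerate the parameter combinations an abstract endpoint accepts and check every response against the hand-written contract. A minimal sketch; the endpoint, its parameters, the simplified `Schema` format (a stand-in for real JSON Schema validation), and `generatedHandler` are all hypothetical:

```typescript
type FieldType = "string" | "number" | "boolean";
type Schema = Record<string, FieldType>;

// Hand-written response contract (part of the static foundation).
const responseSchema: Schema = { entity: "string", operation: "string", ok: "boolean" };

// Return contract violations; an empty list means the payload conforms.
function contractViolations(schema: Schema, payload: unknown): string[] {
  if (typeof payload !== "object" || payload === null || Array.isArray(payload)) {
    return ["payload is not an object"];
  }
  const record = payload as Record<string, unknown>;
  const errors: string[] = [];
  for (const field of Object.keys(schema)) {
    if (!(field in record)) errors.push(`missing field: ${field}`);
    else if (typeof record[field] !== schema[field]) errors.push(`wrong type: ${field}`);
  }
  for (const field of Object.keys(record)) {
    if (!(field in schema)) errors.push(`unexpected field: ${field}`);
  }
  return errors;
}

// Every combination of parameter values (cartesian product of the dimensions).
function cartesian(dims: Record<string, string[]>): Record<string, string>[] {
  let acc: Record<string, string>[] = [{}];
  for (const key of Object.keys(dims)) {
    const next: Record<string, string>[] = [];
    for (const partial of acc) {
      for (const value of dims[key]) next.push({ ...partial, [key]: value });
    }
    acc = next;
  }
  return acc;
}

// Stand-in for the generated implementation, treated as a black box.
function generatedHandler(params: Record<string, string>): unknown {
  return { entity: params.entity, operation: params.operation, ok: true };
}

const cases = cartesian({
  entity: ["customer", "salesOrder", "invoice"],
  operation: ["create", "read", "update", "delete"],
  format: ["json", "csv"],
});

const failures = cases.filter((c) => contractViolations(responseSchema, generatedHandler(c)).length > 0);
console.log(`${cases.length} cases, ${failures.length} failures`);
```

The case count is the product of the dimension sizes (3 × 4 × 2 = 24 here), so adding a third `format` value would raise it to 36. That multiplicative growth is the price of folding more behavior into a more abstract API.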

Tradeoffs:

- a more abstract API means more parameter combinations to cover in testing

- update via regeneration for as long as feasible; most generated code eventually drifts away from the generator's model and capabilities
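Regeneration stays safe only while nobody has hand-edited the generated files. One way to detect drift, sketched under the assumption that the generator records a fingerprint of each file it writes (the manifest, paths, and helper names are hypothetical): before regenerating, compare the file's current fingerprint with the recorded one and skip the file if they differ. FNV-1a is used only because it is short and dependency-free; it is not a cryptographic hash.

```typescript
// 32-bit FNV-1a over UTF-16 code units, returned as 8 hex digits.
function fnv1a(text: string): string {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by FNV prime, mod 2^32
  }
  return ("00000000" + hash.toString(16)).slice(-8);
}

// Hypothetical manifest: file path -> fingerprint recorded at generation time.
type Manifest = Map<string, string>;

// A file may be regenerated only if it was generated and is unchanged since.
function canRegenerate(manifest: Manifest, path: string, currentContent: string): boolean {
  const recorded = manifest.get(path);
  return recorded !== undefined && recorded === fnv1a(currentContent);
}

const generated = "export const version = 1;\n";
const manifest: Manifest = new Map([["src/customer/service.ts", fnv1a(generated)]]);

console.log(canRegenerate(manifest, "src/customer/service.ts", generated)); // true
console.log(canRegenerate(manifest, "src/customer/service.ts", generated + "// hand fix\n"));
```

Files that fail the check have drifted from the generator's model and become candidates for hand maintenance.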

Still to ponder:

- versioning impact

- confabulation and code generation

- security risk: rules files

- ensuring performance

- reducing technical debt

- https://m.slashdot.org/story/440517

- AI memory constraints: is there a "transactional" limit?
